As I was working on my deep learning projects, I faced a problem when PyTorch couldn’t use my computer’s graphics card. This made everything slower. Even after trying different fixes, nothing worked until I found out that a small setting was causing the issue.

To fix “torch is not able to use GPU”, ensure CUDA_VISIBLE_DEVICES is set to the correct value (usually 0) and remove any conflicting variables. This simple adjustment can resolve the issue.

In this article, we’ll discuss a common problem in deep learning: when PyTorch can’t use your computer’s graphics card (GPU). We’ll figure out why this happens and give you simple steps to fix it.

What Is The Common Issue Known As “Torch Is Not Able To Use GPU” – Detailed Answer Here!

As mentioned above, “torch is not able to use GPU” typically refers to a situation in deep learning where the PyTorch library, commonly known as “Torch,” cannot access the Graphics Processing Unit (GPU) on a computer.

In simple words, PyTorch can’t use your computer’s graphics card (GPU), which is important for making deep learning tasks faster. You might notice that things run slower or that big models don’t train well.

How Do You Check If A GPU Is Available In PyTorch?

To check if a GPU is available in PyTorch, you can use the following code snippet:

    import torch

    if torch.cuda.is_available():
        # Get the number of available GPUs
        gpu_count = torch.cuda.device_count()
        print(f"GPU is available with {gpu_count} CUDA device(s).")
    else:
        print("GPU is not available. PyTorch will use CPU.")

This code snippet first imports PyTorch, then checks if CUDA (GPU support) is available using torch.cuda.is_available(). If CUDA is available, it prints the number of CUDA devices (GPUs) using torch.cuda.device_count(). If CUDA is not available, it prints a message indicating that PyTorch will use the CPU.

This is a quick and reliable way to determine if a GPU is available for use with PyTorch.

Why Does “Torch Is Not Able To Use GPU” Happen – Find The Reasons Behind!

This happens when there are obstacles preventing PyTorch from accessing the computer’s GPU. Some common reasons include outdated GPU drivers, incorrect configurations, or conflicts with other software. When these issues arise, PyTorch defaults to using the CPU instead of the GPU, leading to slower performance in deep learning tasks. Let’s have a detailed look into it:

Outdated GPU Drivers

One of the most common reasons Torch may not detect your GPU is outdated drivers. GPU drivers need to be up-to-date to ensure compatibility with Torch and other machine learning libraries. Using an older driver version might lead to detection issues and reduced performance.
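To see which driver version you are actually running, you can ask the nvidia-smi tool that ships with the NVIDIA driver. The snippet below is a minimal sketch, assuming an NVIDIA GPU; the helper names `parse_smi_csv` and `query_driver` are made up for this example and are not part of any library.

```python
import shutil
import subprocess


def parse_smi_csv(text):
    """Turn 'name, driver_version' CSV lines into a list of (name, driver) tuples."""
    rows = []
    for line in text.strip().splitlines():
        if not line.strip():
            continue
        # Split on the last comma so a comma inside the GPU name cannot break parsing.
        name, driver = (part.strip() for part in line.rsplit(",", 1))
        rows.append((name, driver))
    return rows


def query_driver():
    """Return [(gpu_name, driver_version), ...], or None if nvidia-smi is unavailable."""
    exe = shutil.which("nvidia-smi")
    if exe is None:
        return None  # no NVIDIA driver tools on PATH
    try:
        result = subprocess.run(
            [exe, "--query-gpu=name,driver_version", "--format=csv,noheader"],
            capture_output=True, text=True, timeout=10,
        )
    except (OSError, subprocess.TimeoutExpired):
        return None
    if result.returncode != 0:
        return None  # the tool ran but could not talk to the driver
    return parse_smi_csv(result.stdout)


gpus = query_driver()
if gpus is None:
    print("nvidia-smi not found or failed: the NVIDIA driver may be missing or broken.")
else:
    for name, driver in gpus:
        print(f"{name}: driver {driver}")
```

If this reports that nvidia-smi is missing or failing, the problem is most likely at the driver level, and no change on the PyTorch side will help until the driver works.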

Incompatible Hardware

Another potential reason for Torch not detecting your GPU is hardware incompatibility. Some older GPUs or less common models might not be fully supported by the latest versions of Torch and CUDA. Checking the compatibility of your GPU with the Torch version you’re using is important to ensure proper detection and functionality.

CUDA Toolkit Mismatch

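Torch relies on CUDA for GPU acceleration. Prebuilt PyTorch packages bundle the CUDA runtime they were built against, so the mismatch that matters is usually between that CUDA version and what your NVIDIA driver supports, or a CPU-only PyTorch build installed by mistake. If the versions are incompatible, the GPU may not be detected. Ensuring that your PyTorch build, its CUDA version, and your driver are compatible is crucial for proper GPU utilization.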
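A quick way to see which CUDA version your PyTorch was built for is to read `torch.version.cuda`. Here is a small sketch, assuming an NVIDIA setup; `describe_build` is a hypothetical helper written for this article, while `torch.__version__`, `torch.version.cuda`, and `torch.cuda.is_available()` are real PyTorch attributes.

```python
try:
    import torch
except ImportError:
    torch = None  # PyTorch itself is missing from this environment


def describe_build(torch_version, built_cuda, cuda_available):
    """Summarize what kind of PyTorch build is installed."""
    if built_cuda is None:
        # torch.version.cuda is None when the build has no CUDA support.
        return f"PyTorch {torch_version} is a CPU-only build (torch.version.cuda is None)."
    if not cuda_available:
        return (f"PyTorch {torch_version} was built for CUDA {built_cuda}, "
                "but no usable GPU was found: check the driver.")
    return f"PyTorch {torch_version} was built for CUDA {built_cuda} and can use the GPU."


if torch is None:
    print("PyTorch is not installed in this environment.")
else:
    print(describe_build(torch.__version__, torch.version.cuda, torch.cuda.is_available()))
```

A CPU-only build is a very common cause of this error, and reinstalling a CUDA-enabled build is usually the fix in that case.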

Incorrect Build Installation

Incorrect or incomplete installation of Torch or its dependencies can also prevent GPU detection. This includes improperly configured environment variables or missing components required for Torch to interface with the GPU. Verifying the installation steps and ensuring all necessary components are installed can resolve these issues.
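One environment variable worth checking is CUDA_VISIBLE_DEVICES, because a wrong value can hide your GPU from every CUDA program. The sketch below only reads the variable and explains it; `explain_visible_devices` is an illustrative helper name, not a PyTorch function.

```python
import os


def explain_visible_devices(value):
    """Interpret a CUDA_VISIBLE_DEVICES value (None means the variable is unset)."""
    if value is None:
        return "unset: all GPUs are visible"
    if value.strip() in ("", "-1"):
        # An empty value or an invalid index such as -1 leaves no device visible.
        return "hides every GPU from CUDA programs"
    ids = [part.strip() for part in value.split(",") if part.strip()]
    return f"exposes only device(s): {', '.join(ids)}"


current = os.environ.get("CUDA_VISIBLE_DEVICES")
print(f"CUDA_VISIBLE_DEVICES={current!r} -> {explain_visible_devices(current)}")
```

Keep in mind that the variable is read when CUDA starts up, so it needs to be set before your script makes its first GPU call, ideally before Python is launched.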

Last But Not Least, Permissions Issues

Permissions issues can arise if Torch does not have the necessary access rights to utilize the GPU. This can occur in multi-user systems or environments with restricted access settings. Ensuring Torch has the correct permissions to access the GPU hardware is essential for detection and usage.
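On Linux, one rough way to spot a permissions problem is to check whether your user can read and write the NVIDIA device files. This is only a sketch under that assumption: the `/dev/nvidia*` path pattern applies to typical Linux driver installs, and `check_device_nodes` is a name invented for this example.

```python
import glob
import os


def check_device_nodes(pattern="/dev/nvidia*"):
    """Map each NVIDIA device node to True/False for read+write access by this user."""
    report = {}
    for path in sorted(glob.glob(pattern)):
        report[path] = os.access(path, os.R_OK | os.W_OK)
    return report


nodes = check_device_nodes()
if not nodes:
    print("No /dev/nvidia* nodes found (no NVIDIA driver loaded, or not a Linux system).")
for path, ok in nodes.items():
    print(f"{path}: {'accessible' if ok else 'NOT accessible to this user'}")
```

If a node shows up as not accessible, ask your system administrator which group or container setting grants GPU access on that machine.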

How Can I Troubleshoot The Issue Of “Torch Is Not Able To Use GPU” – Step-By-Step Guide!

To fix the issue of “torch is not able to use GPU”, you can try the following steps:

1. Check GPU Availability:

- Make sure your computer has a GPU.

- Use torch.cuda.is_available() to check if PyTorch can see it.

- Check GPU memory with nvidia-smi.

2. Verify CUDA Toolkit:

- PyTorch needs CUDA for GPU support.

- Install the right CUDA version for your PyTorch.

- Update or reinstall the CUDA Toolkit if needed.

3. Review PyTorch Installation:

- Reinstall or update PyTorch.

- Check it’s installed in the right place.

4. Environment Variables:

- Look for CUDA_VISIBLE_DEVICES.

- Make sure it’s set correctly (e.g., CUDA_VISIBLE_DEVICES=0).

- Remove any conflicting variables.

5. Code Check:

- Review your code.

- Ensure you’re sending tensors and models to the GPU correctly.

6. Driver Problems:

- Old or wrong GPU drivers can mess things up.

- Update your GPU drivers to the latest version.

7. Runtime Selection:

- If you’re using platforms like Google Colab, choose GPU as the runtime.

8. Memory Issues:

- If your GPU memory is full, PyTorch raises a CUDA out-of-memory error; it does not silently switch to the CPU.

- Keep an eye on GPU memory usage and manage it wisely.
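For the code check in step 5, a common pattern is to pick the device once and then put both the model and the data on it. The example below is a minimal sketch with a toy `nn.Linear` model and random input standing in for your real model and data; `run_forward_pass` is just a name chosen for this illustration.

```python
try:
    import torch
    from torch import nn
except ImportError:
    torch = None  # lets the script report a missing install instead of crashing


def run_forward_pass():
    """Run one forward pass on the GPU if available, otherwise on the CPU."""
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
    model = nn.Linear(4, 2).to(device)        # move the model's parameters
    batch = torch.randn(8, 4, device=device)  # create the input on the same device
    output = model(batch)
    return device, output


if torch is None:
    print("PyTorch is not installed.")
    result = None
else:
    device, output = run_forward_pass()
    print(f"Ran on {device}; output shape {tuple(output.shape)}")
    result = (device.type, tuple(output.shape))
```

The key point is that the model and its inputs must live on the same device. Mixing a GPU model with CPU tensors raises an error rather than quietly working.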

What Are Some Common Signs That Indicate Torch Is Not Able To Use GPU?

When PyTorch can’t use the computer’s graphics card (GPU), you might notice a few things. Tasks that should be fast might be really slow. Sometimes, you might see error messages talking about the GPU or how PyTorch can’t use it.

You can also check by calling `torch.cuda.is_available()`. If it returns `False`, that means PyTorch can’t find or can’t use the GPU.

Another sign is that PyTorch ends up using the computer’s regular processor (CPU) instead of the GPU. And if you’re trying to train big models, you might run into problems because there’s not enough memory on the GPU. These are all signs that PyTorch isn’t able to use the GPU as it should.

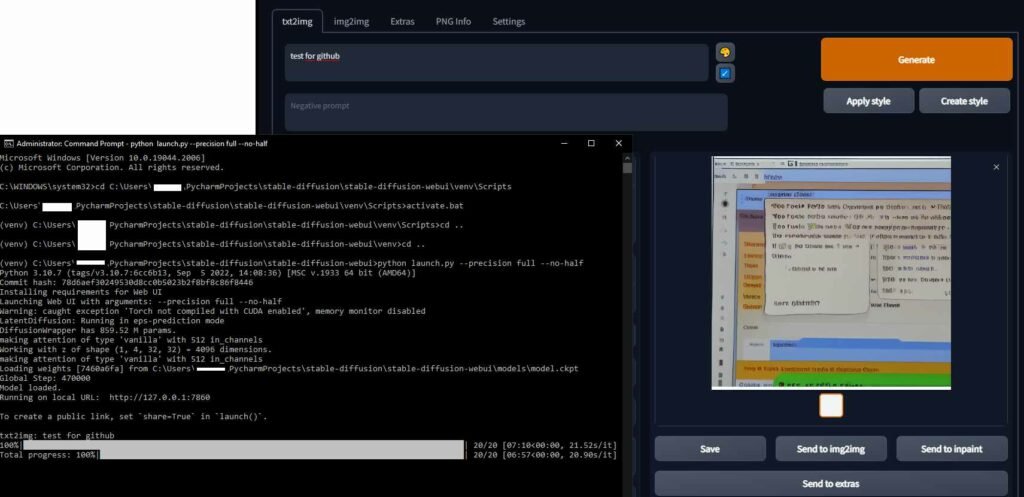

What Role Does The CUDA Toolkit Play In Enabling GPU Support For PyTorch?

CUDA is really important for PyTorch to use the GPU. It’s like a toolbox of libraries that PyTorch needs to talk to the GPU and use its power. Prebuilt PyTorch packages ship with the CUDA runtime libraries they need, so what you must install yourself is a compatible NVIDIA driver; the full CUDA Toolkit is mainly needed when you build PyTorch or custom CUDA extensions from source. Without a working CUDA setup, PyTorch can’t use the GPU properly.

Can You Use PyTorch On A Machine Without A GPU – Don’t Miss Out!

Yes, you can still use PyTorch on a machine without a GPU. PyTorch is designed to work on both CPUs and GPUs, so it can still perform deep learning tasks on a CPU-only machine. While using a GPU can significantly speed up computations, especially for large models and datasets, PyTorch’s CPU support allows for development and experimentation even without dedicated GPU hardware.

Are There Any Disadvantages To Using PyTorch On CPU Instead Of GPU?

Slower Performance: Deep learning tasks typically run much slower on a CPU compared to a GPU. This means training and inference times can be significantly longer when using only a CPU.

Limited Parallelism: GPUs are optimized for parallel computation, allowing them to handle many operations at once. CPUs have far fewer cores, which limits how efficiently they can process deep learning operations.

Memory Bandwidth: GPU memory offers much higher bandwidth than system RAM, so a GPU can feed large models and batches to its cores far faster. A CPU-only machine may have more RAM in total, but the lower bandwidth slows down work on large neural networks or datasets.

Reduced Training Capacity: With slower performance and limited parallelism, CPU-only setups may not be suitable for training large and complex models effectively. In practice, training time becomes the limit on the size and complexity of the models you can train.

Overall, while PyTorch can run on CPUs, using a GPU for deep learning tasks offers significant advantages in terms of performance, parallelism, and memory bandwidth.

Frequently Asked Questions:

Why is PyTorch not detecting my GPU?

PyTorch might not see your graphics card if it’s old or if there’s a problem with how it’s set up. Make sure your graphics card drivers are up to date and that everything is set up correctly.

How can I check if PyTorch is using my GPU?

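You can check whether PyTorch is using your graphics card by calling `torch.cuda.is_available()` and by looking at the `.device` attribute of your tensors or model parameters. You can also run the nvidia-smi tool while your script is working to see whether your Python process shows up as using GPU memory.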
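Here is a small sketch of that check from inside Python. The helper `where_is` is a made-up name for this example; `.device`, `torch.cuda.get_device_name()`, and `torch.cuda.memory_allocated()` are real PyTorch calls.

```python
try:
    import torch
except ImportError:
    torch = None  # PyTorch is not installed in this environment


def where_is(obj):
    """Return the device type ('cuda' or 'cpu') holding a tensor or a module's parameters."""
    if isinstance(obj, torch.nn.Module):
        obj = next(obj.parameters())  # a module lives where its parameters live
    return obj.device.type


if torch is not None:
    x = torch.ones(3)
    print("tensor on:", where_is(x))
    if torch.cuda.is_available():
        x = x.to("cuda")
        print("after .to('cuda'):", where_is(x))
        print("GPU name:", torch.cuda.get_device_name(0))
        print("bytes allocated by PyTorch:", torch.cuda.memory_allocated(0))
```

If your model or tensors report `cpu` even though `torch.cuda.is_available()` is true, the GPU works and the code simply never moved them there.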

Does Torch support GPU acceleration?

Yes, Torch supports GPU acceleration, allowing for significantly faster computation on compatible hardware. This is achieved through integration with CUDA, enabling efficient use of NVIDIA GPUs.

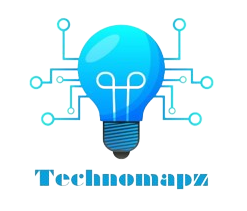

RuntimeError: Torch is not able to use GPU

The “RuntimeError: Torch is not able to use GPU” error typically occurs due to outdated GPU drivers, an incompatible CUDA toolkit version, or incorrect installation of Torch. Updating GPU drivers, ensuring CUDA compatibility, and verifying Torch installation with proper environment configurations can resolve this issue.

What should I do if PyTorch is running slower than expected on my GPU?

Sometimes PyTorch might run slowly on your graphics card because it’s trying to do too much at once, or because your graphics card isn’t powerful enough. You can try making things simpler or upgrading your graphics card to make it faster.

Can I train deep learning models with PyTorch on a CPU-only machine?

Yes, PyTorch can run on CPU-only machines, but training deep learning models on CPUs may be slower compared to using GPUs. However, for smaller models and datasets, or for experimentation and development purposes, training on a CPU-only machine is still feasible.

Why am I getting out-of-memory errors when training with PyTorch on my GPU?

PyTorch might give you an error about running out of memory if your graphics card doesn’t have enough memory to do what you’re asking it to do. You can try doing less at once or getting a graphics card with more memory to fix this.
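One way to “do less at once” is to shrink the batch size automatically when an out-of-memory error appears. The sketch below shows the idea with a simulated training step, so it runs without a GPU; `run_with_smaller_batches` and `fake_step` are hypothetical names, and the check relies on the assumption that the error text contains “out of memory”, as PyTorch’s CUDA out-of-memory errors do.

```python
def run_with_smaller_batches(step, batch_size, min_batch_size=1):
    """Call step(batch_size); on an out-of-memory error, halve the batch and retry."""
    while True:
        try:
            return batch_size, step(batch_size)
        except RuntimeError as err:
            if "out of memory" not in str(err).lower() or batch_size <= min_batch_size:
                raise  # a different error, or nothing left to shrink
            batch_size = max(min_batch_size, batch_size // 2)
            print(f"Out of memory; retrying with batch size {batch_size}")


def fake_step(batch_size):
    # Stand-in for a real training step: pretends anything above 16 does not fit.
    if batch_size > 16:
        raise RuntimeError("CUDA out of memory (simulated)")
    return f"trained on {batch_size} samples"


used, message = run_with_smaller_batches(fake_step, batch_size=64)
print(used, message)
```

In a real script you would pass your own training step instead of `fake_step`, and you might also call `torch.cuda.empty_cache()` before retrying.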

How to solve the “Torch is not able to use GPU” error?

To solve the “Torch is not able to use GPU” error, ensure your GPU drivers and CUDA toolkit are up-to-date and compatible with your Torch version. Additionally, verify the correct installation of Torch and its dependencies, and check for proper permissions to access the GPU hardware.

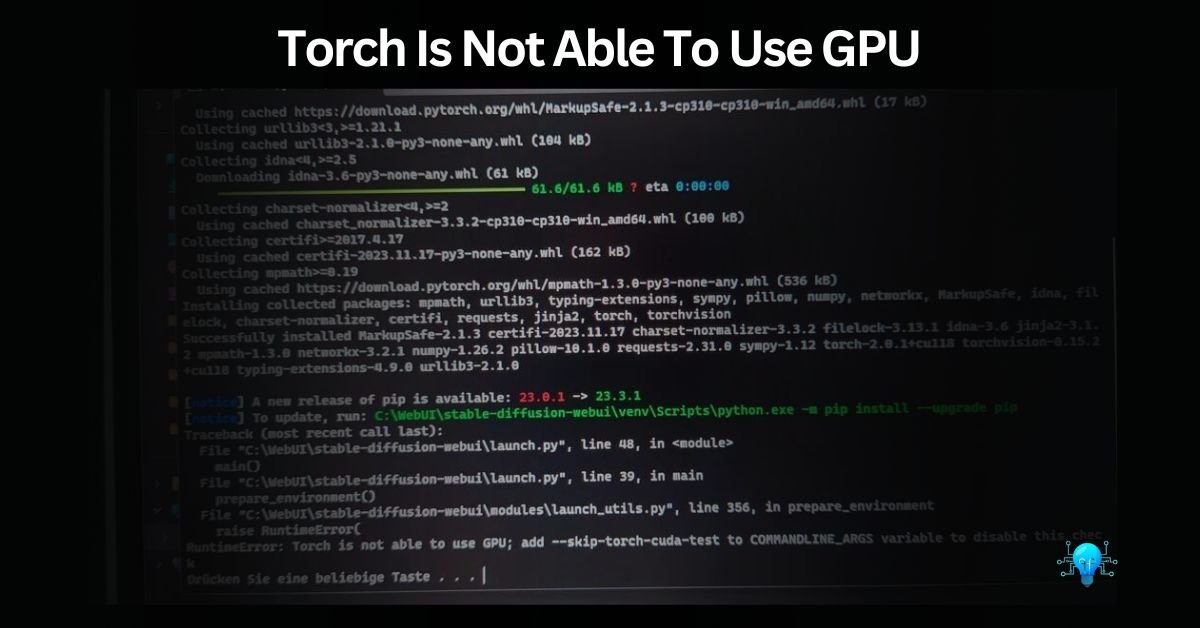

What Does “torch is not able to use GPU; add --skip-torch-cuda-test to commandline_args variable to disable this check” Mean?

This message comes from an application’s launch script rather than from PyTorch itself; it is commonly seen when starting the Stable Diffusion web UI. To bypass the “torch is not able to use GPU” error and disable the GPU check, add --skip-torch-cuda-test to the commandline_args variable. This flag tells the launcher to skip its CUDA test during startup, allowing you to proceed with CPU execution if needed.

Conclusion:

If you’re seeing “torch is not able to use GPU”, don’t worry! By checking that your GPU drivers are updated and the CUDA Toolkit is installed correctly, you can fix many problems.

Also, make sure your code is optimized for the GPU, and keep an eye on how much memory your GPU is using. With these steps, you’ll be able to make the most of PyTorch’s power and speed up your deep-learning tasks.