Hey There, Fellow Deep Learner!

Ever wondered how to make your computer work extra hard for those cool deep-learning projects you’re building with PyTorch? Well, hold on a second! Before you jump into training some super-complex models, there’s one quick thing to check: does your computer have a graphics card (GPU) that PyTorch can use?

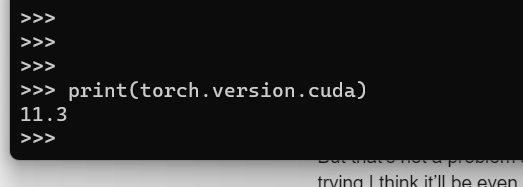

PyTorch offers a simple way to see if your computer has a graphics card (GPU) it can use for faster deep learning. Just run this code: import torch; print(torch.cuda.is_available()). If it prints True, PyTorch can see a CUDA-capable GPU and you’re good to go!

So, let’s get you set up to check if your GPU is ready to rock and roll with PyTorch!

What Is Meant By Checking For A GPU In PyTorch? – Clear It Out First!

In PyTorch, we can make our deep learning projects run faster by tapping into the power of GPUs, which are like supercharged engines for computations. Checking for GPU availability is simply making sure that we can use them. By doing this, we can speed up tasks like training our models. It’s like making sure we have a turbo boost available when we need it!

We can easily check for GPU using PyTorch’s torch.cuda.is_available() function. If it’s available, great! We use it. If not, no worries, we can still get the job done with the CPU. This simple step ensures we’re making the most of our hardware, making our projects run smoother and faster.

When we talk about “checking for GPU” in PyTorch, we’re referring to a simple yet pivotal step in ensuring that your code can leverage the computational prowess of a GPU if available. Essentially, it involves determining whether a GPU is accessible and ready to be utilized for computations. Here’s why this step is important:

1. Performance Boost: GPUs are specifically designed to handle the kinds of parallel computations that are inherent in deep learning tasks. Utilizing a GPU can lead to significant speedups during both the training and inference phases of your models.

2. Resource Management: In scenarios where multiple GPUs are available, PyTorch allows you to distribute your computations across these devices, maximizing efficiency and throughput.

How To Check If A GPU Is Available In PyTorch? – Check GPU Availability In PyTorch!

Checking if a GPU is available in PyTorch is important for making the most of your hardware. It’s like seeing if you have a high-performance engine (the GPU) in your car. Here’s how you can do it:

    import torch

    # Check if GPU is available
    if torch.cuda.is_available():
        print("Great news! You have a GPU!")
    else:
        print("Oops! No GPU found. But don't worry, we'll use the CPU.")

GPU Is Not Available For PyTorch – What To Do?

If a GPU is not available for PyTorch, you can still use your CPU for computations, but you might experience slower performance.

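- If a GPU is unavailable, PyTorch keeps tensors and models on the CPU, which is its default device. Code that explicitly asks for 'cuda' raises an error instead of falling back, so pick the device based on torch.cuda.is_available().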

- CPUs are generally slower for deep learning tasks compared to GPUs due to fewer processing cores optimized for parallel computations.

- While running on a CPU, tasks may take longer to complete compared to running on a GPU.

- PyTorch’s flexibility allows seamless switching between GPU and CPU, ensuring your code runs regardless of hardware availability.

- You can continue developing and experimenting with deep learning models using your CPU until access to a GPU becomes available for improved performance.
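The points above can be sketched as a small device-agnostic snippet. This is a minimal illustration, not the only way to do it; the tensor shapes and variable names are made up for the example.

```python
import torch

# Pick the GPU when CUDA is usable, otherwise select the CPU explicitly.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Create the data directly on the chosen device (example shapes only).
x = torch.randn(4, 3, device=device)
w = torch.randn(3, 2, device=device)

y = x @ w  # runs on whichever device was selected above
print(f"Computed a result of shape {tuple(y.shape)} on {y.device.type}")
```

Because every tensor is created with device=device, the same script runs unchanged on a machine with or without a GPU.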

Is it possible to check if GPU is available without using deep learning packages like TF or torch?

Yes, it’s possible to check GPU availability without deep-learning packages.

You can use system-level tools such as nvidia-smi, which is installed with the NVIDIA driver, or libraries like PyCUDA to check GPU availability without relying on deep learning frameworks. These tools let you query the system for GPU presence and specifications, so you can determine GPU availability for any computation, not just deep learning tasks.
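As a rough sketch of the idea, the following uses only Python’s standard library to ask nvidia-smi for the list of GPUs. It assumes an NVIDIA setup where nvidia-smi is on the PATH; the helper name list_nvidia_gpus is hypothetical, and GPUs from other vendors will not be reported.

```python
import shutil
import subprocess


def list_nvidia_gpus():
    """Return one line per NVIDIA GPU reported by nvidia-smi, or [] if none."""
    # If nvidia-smi is not on PATH, treat it as "no NVIDIA GPU visible".
    if shutil.which("nvidia-smi") is None:
        return []
    try:
        result = subprocess.run(
            ["nvidia-smi", "-L"],  # -L lists each GPU on its own line
            capture_output=True,
            text=True,
            timeout=10,
            check=True,
        )
    except (subprocess.SubprocessError, OSError):
        # The tool exists but failed, for example because the driver is not loaded.
        return []
    # Keep only the per-GPU lines, which start with "GPU <index>:".
    return [line for line in result.stdout.splitlines() if line.startswith("GPU ")]


gpus = list_nvidia_gpus()
print(f"Found {len(gpus)} NVIDIA GPU(s)")
for line in gpus:
    print(line)
```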

What If Torch Is Not Able To Use GPU?

If Torch is unable to use the GPU, your computations have to run on the CPU, which may significantly slow down deep learning tasks. Keep in mind that the fallback is not automatic for code that hard-codes 'cuda': such code raises an error until you select the device conditionally.

How can I check if PyTorch is using the GPU?

You can check if PyTorch is using the GPU by examining the device that tensors and models are placed on during computation.

- Check the device of a tensor using its .device attribute.

- Models don’t have a .device attribute of their own, so check one of their parameters instead, e.g. next(model.parameters()).device.

- If the device is a GPU device (e.g., cuda:0), PyTorch is using the GPU.

- If the device is the CPU (cpu), PyTorch is using the CPU.

Programmatically check if PyTorch is using a GPU:

In code, this means reading the .device attribute of a tensor (or of a model’s parameters). If it says cuda:0, PyTorch is running those computations on the first GPU. If it says cpu, they are running on the CPU.
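Here is a small sketch of that check. The nn.Linear layer is only a stand-in model for illustration; any module with parameters works the same way.

```python
import torch
from torch import nn

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

tensor = torch.ones(2, 2).to(device)
model = nn.Linear(2, 1).to(device)  # hypothetical stand-in model

# Tensors expose .device directly.
print("tensor is on:", tensor.device)

# Modules have no .device attribute, so look at one of their parameters.
model_device = next(model.parameters()).device
print("model is on:", model_device)

# .is_cuda is a boolean shortcut for the same check on a tensor.
print("tensor on GPU?", tensor.is_cuda)
```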

Some Other Factors To Consider:

Checking if a GPU is available on Mac

On a Mac, torch.cuda.is_available() normally returns False, because CUDA needs an NVIDIA GPU and current Macs don’t ship with one.

Instead, PyTorch (version 1.12 and later) can use the Mac’s GPU through Apple’s Metal Performance Shaders (MPS) backend. Check it with torch.backends.mps.is_available(), and use the 'mps' device if it returns True. Keep in mind that the integrated GPUs in Mac systems might not offer the same level of performance as the dedicated GPUs found in other systems.
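A minimal sketch of a device picker that also covers Macs might look like this. It assumes PyTorch 1.12 or later for the MPS backend (torch.backends.mps); the getattr guard is there so the snippet still runs on older versions. The function name pick_device is made up for this example.

```python
import torch


def pick_device():
    """Prefer CUDA, then Apple's MPS backend, then the CPU."""
    if torch.cuda.is_available():
        return torch.device("cuda")
    # torch.backends.mps only exists in newer PyTorch releases.
    mps = getattr(torch.backends, "mps", None)
    if mps is not None and mps.is_available():
        return torch.device("mps")
    return torch.device("cpu")


device = pick_device()
print("Using device:", device)
```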

torch.cuda.is_available() returns False

If torch.cuda.is_available() returns False, PyTorch couldn’t find a usable CUDA GPU on your system. Common causes include a CPU-only PyTorch build, missing or outdated NVIDIA drivers, a mismatch between the CUDA version PyTorch was built with and the installed driver, or hardware without CUDA support.
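When you are tracking down the cause, it can help to print a few facts about the installed PyTorch build. The snippet below is one possible sketch; the cuda_report helper and its dictionary keys are invented for this example.

```python
import torch


def cuda_report():
    """Collect facts that often explain a False from torch.cuda.is_available()."""
    return {
        "torch_version": torch.__version__,
        # None means this PyTorch build was compiled without CUDA support.
        "built_with_cuda": torch.version.cuda,
        "cuda_available": torch.cuda.is_available(),
        "visible_gpus": torch.cuda.device_count(),
    }


report = cuda_report()
for key, value in report.items():
    print(f"{key}: {value}")
```

If built_with_cuda prints None, the installed package is a CPU-only build, and reinstalling a CUDA-enabled build is usually the first thing to try.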

Using GPU in your PyTorch code

To use the GPU in PyTorch, first make sure it’s available, then put your tensors and models on it. You can either move them with the .to() method, for example .to('cuda'), or create them directly on the GPU. This lets PyTorch perform the computations on the GPU, resulting in faster execution of your code.

However, some tasks need the data on the CPU. For example, converting a tensor to a NumPy array with .numpy() only works for CPU tensors, so you may need to move results back with .cpu() first.

Overall, utilizing the GPU can significantly accelerate your PyTorch code, especially for computationally intensive tasks like training deep learning models.
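Putting these steps together, a minimal sketch could look like the following. The layer sizes and batch size are arbitrary example values, and the snippet falls back to the CPU when no GPU is found so that it runs anywhere. It assumes NumPy is installed alongside PyTorch.

```python
import torch
from torch import nn

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Move the model to the device and create the inputs directly on it.
model = nn.Linear(8, 2).to(device)
inputs = torch.randn(16, 8, device=device)

# Inference only, so gradient tracking is switched off.
with torch.no_grad():
    outputs = model(inputs)

# NumPy works with CPU memory, so copy the result back before converting.
result = outputs.cpu().numpy()
print("Result shape:", result.shape)
```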

How to check if GPU is available in Jupyter Notebook

In a Jupyter Notebook, GPU availability can be checked using torch.cuda.is_available() just like in any Python script. If it returns True, you can proceed to use the GPU in your notebook for accelerated computations. If False, you’ll have to rely on the CPU for computation.

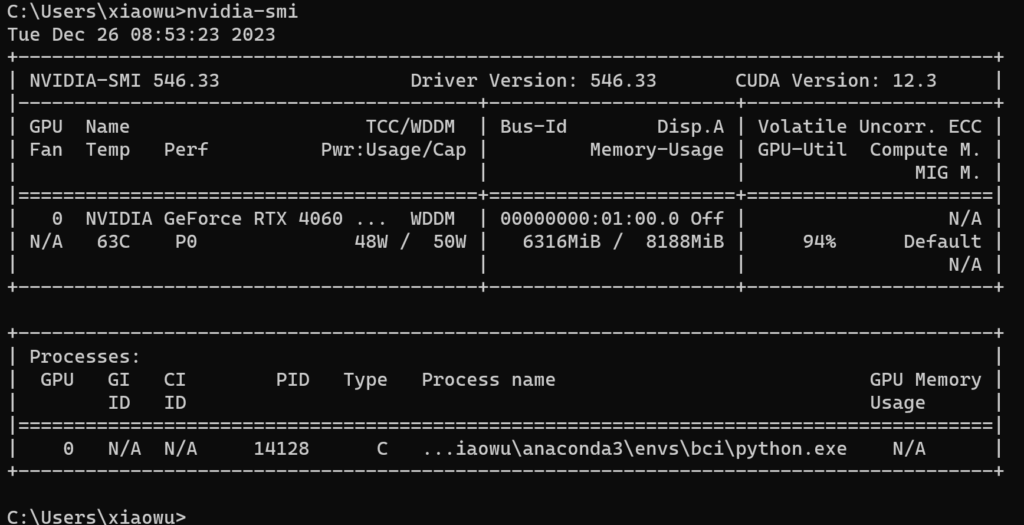

Additionally, you can check GPU memory usage and specifications with NVIDIA’s nvidia-smi tool, which you can run from a notebook cell as !nvidia-smi. (psutil reports CPU and system memory rather than GPU memory.) Simply follow the guidelines we’ve mentioned below!

- Import torch library.

- Use torch.cuda.is_available() function.

- If it returns True, GPU is available.

- If it returns False, GPU is not available.
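The steps above, plus a quick look at GPU memory, can be sketched as a single notebook cell. The helper name gpu_summary is hypothetical, and the memory figure only counts memory allocated by PyTorch tensors in the current process.

```python
import torch


def gpu_summary():
    """Return a short, human-readable summary of the current CUDA device."""
    if not torch.cuda.is_available():
        return "No CUDA GPU available; running on the CPU."
    index = torch.cuda.current_device()
    props = torch.cuda.get_device_properties(index)
    total_gib = props.total_memory / 1024**3
    used_mib = torch.cuda.memory_allocated(index) / 1024**2
    return (
        f"GPU {index}: {props.name}, "
        f"{total_gib:.1f} GiB total, "
        f"{used_mib:.1f} MiB allocated by PyTorch tensors"
    )


summary = gpu_summary()
print(summary)
```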

Frequently Asked Questions:

How do I know if my GPU is available in Python?

You can check GPU availability in Python using libraries like torch or tensorflow by calling their respective functions, such as torch.cuda.is_available() or tensorflow.config.list_physical_devices('GPU').

How do I check if the CUDA device is available in Torch?

To check if a CUDA device is available in Torch, use the torch.cuda.is_available() function. If it returns True, a CUDA device is available.

How to find the NVIDIA GPU IDs for the PyTorch CUDA run setup?

To find the NVIDIA GPU IDs for a PyTorch CUDA setup, run the nvidia-smi command in the terminal. It displays information about the NVIDIA GPUs installed on your system, including their IDs; nvidia-smi -L prints a compact list with one line per GPU.
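From inside Python, you can also list the device indices that PyTorch itself sees. This is a small sketch with a made-up helper name, list_cuda_devices; note that PyTorch’s indices may not match the order shown by nvidia-smi, for example when the CUDA_VISIBLE_DEVICES environment variable is set.

```python
import torch


def list_cuda_devices():
    """Return (index, name) pairs for every CUDA device PyTorch can see."""
    return [
        (index, torch.cuda.get_device_name(index))
        for index in range(torch.cuda.device_count())
    ]


devices = list_cuda_devices()
if not devices:
    print("PyTorch sees no CUDA devices.")
for index, name in devices:
    # The index is what you pass to PyTorch, e.g. torch.device(f"cuda:{index}").
    print(f"cuda:{index} -> {name}")
```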

Is Keras using the GPU?

To check if Keras can use the GPU, call tensorflow.config.list_physical_devices('GPU') when running on the TensorFlow backend. If the returned list contains a GPU device, Keras can use it for computations.

Summing Up The Discussion:

To check if a GPU is available in PyTorch, use the torch.cuda.is_available() function. If it returns True, a GPU is available; otherwise, it returns False, indicating no GPU is available.

Check your system and then follow the guidelines we have described here in detail for you guys!